Work and Research Experience

3D Computer Vision for Robotic Bin Picking

Machine Learning Intern, More Inc

We used multiple modalities of in-house 3D data to design custom, lightweight, geometry-aware ML architectures optimized for real-time robotic tasks. This work included developing custom PyTorch operators with learnable parameters and an interactive visualization dashboard for hyperparameter tuning and model evaluation.

Using the geometry-aware design, we reduced model size by 99% compared to conventional CNNs on in-house 3D datasets.

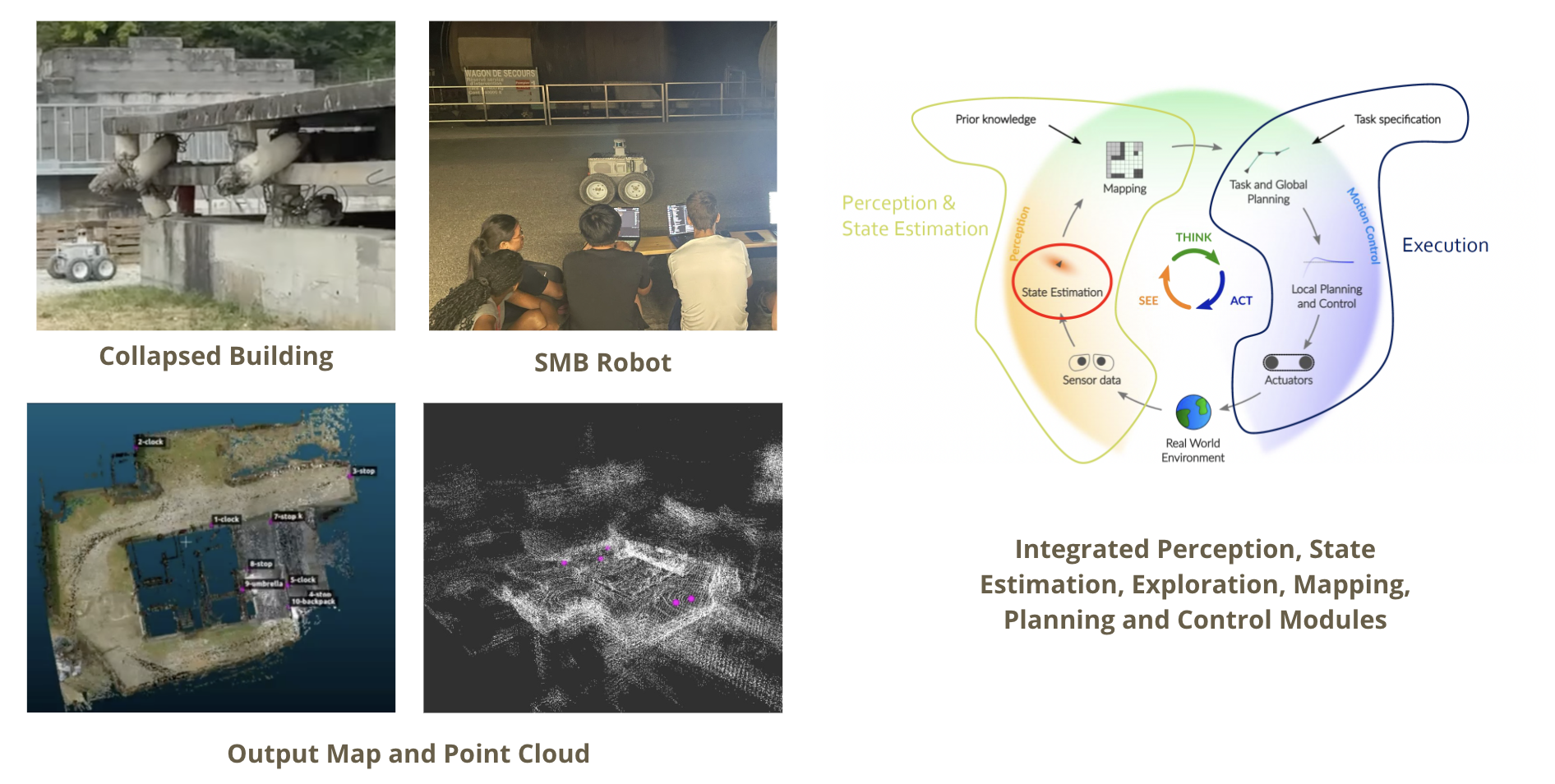

Search and Rescue Mapping with UGV

Robotics Summer School, ETH Zurich

This project was part of the ETH Zürich Robotics Summer School, in which around 50 students from across the globe were split into 8 teams. We worked on autonomous mobile robots at a Swiss military site with realistic search and rescue environments.

The program culminated in the search and rescue challenge. Our robot autonomously entered an unknown collapsed building, navigating and mapping the environment while detecting objects of interest. It completed the mapping in under 15 minutes, returning with a 3D point cloud map of the building and the locations of the identified objects of interest.

Video Snippet from the ETH Robotics Summer School 2025 Aftermovie (Play with Audio)

Autonomous Surgical Robotic Arm using Imitation Learning

CHARM and IPRL Labs, Stanford University

I am exploring transformer-based imitation learning algorithms, specifically the Action Chunking with Transformers (ACT) architecture, to enable autonomous manipulation with one of the da Vinci surgical robot's arms, which assists the surgeon.
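In ACT, the policy predicts a chunk of k future actions at every step, so several overlapping chunks propose an action for the same timestep. The ACT paper combines these proposals with temporal ensembling, weighting them by w_i = exp(-m·i), where i = 0 is the oldest prediction. Below is a minimal stdlib sketch of that ensembling step. It assumes each action is a list of floats. The function name `temporal_ensemble` and the value m = 0.01 are illustrative, and this is not the code used on the da Vinci arm.

```python
import math


def temporal_ensemble(chunks, t, m=0.01):
    """Blend every prediction for timestep t from overlapping action chunks.

    chunks: dict mapping the timestep t0 at which a chunk was predicted to a
            list of k actions (each a list of floats) for timesteps t0..t0+k-1.
    Weights are w_i = exp(-m * i), with i = 0 for the oldest prediction.
    """
    # Collect the predictions that cover timestep t, oldest chunk first.
    preds = []
    for t0 in sorted(chunks):
        offset = t - t0
        if 0 <= offset < len(chunks[t0]):
            preds.append(chunks[t0][offset])
    if not preds:
        raise ValueError(f"no chunk covers timestep {t}")

    # Exponential weights, normalised so they sum to one.
    weights = [math.exp(-m * i) for i in range(len(preds))]
    total = sum(weights)
    dim = len(preds[0])
    return [sum(w * p[d] for w, p in zip(weights, preds)) / total for d in range(dim)]
```

With a chunk size of k = 3 and a query at every step, timestep 2 receives three proposals (from the chunks predicted at t = 0, 1 and 2). The ensembled command is a weighted average of those proposals, which smooths the switch from one chunk to the next.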

I developed a teleoperation system using the Phantom Omni haptic device to control the robotic arm and am collaborating with domain experts to build a data collection pipeline for surgical task demonstrations.

The video presents both automated and semi-automated demonstrations of bimanual (two-handed) and tri-manual (three-handed) manipulation tasks.

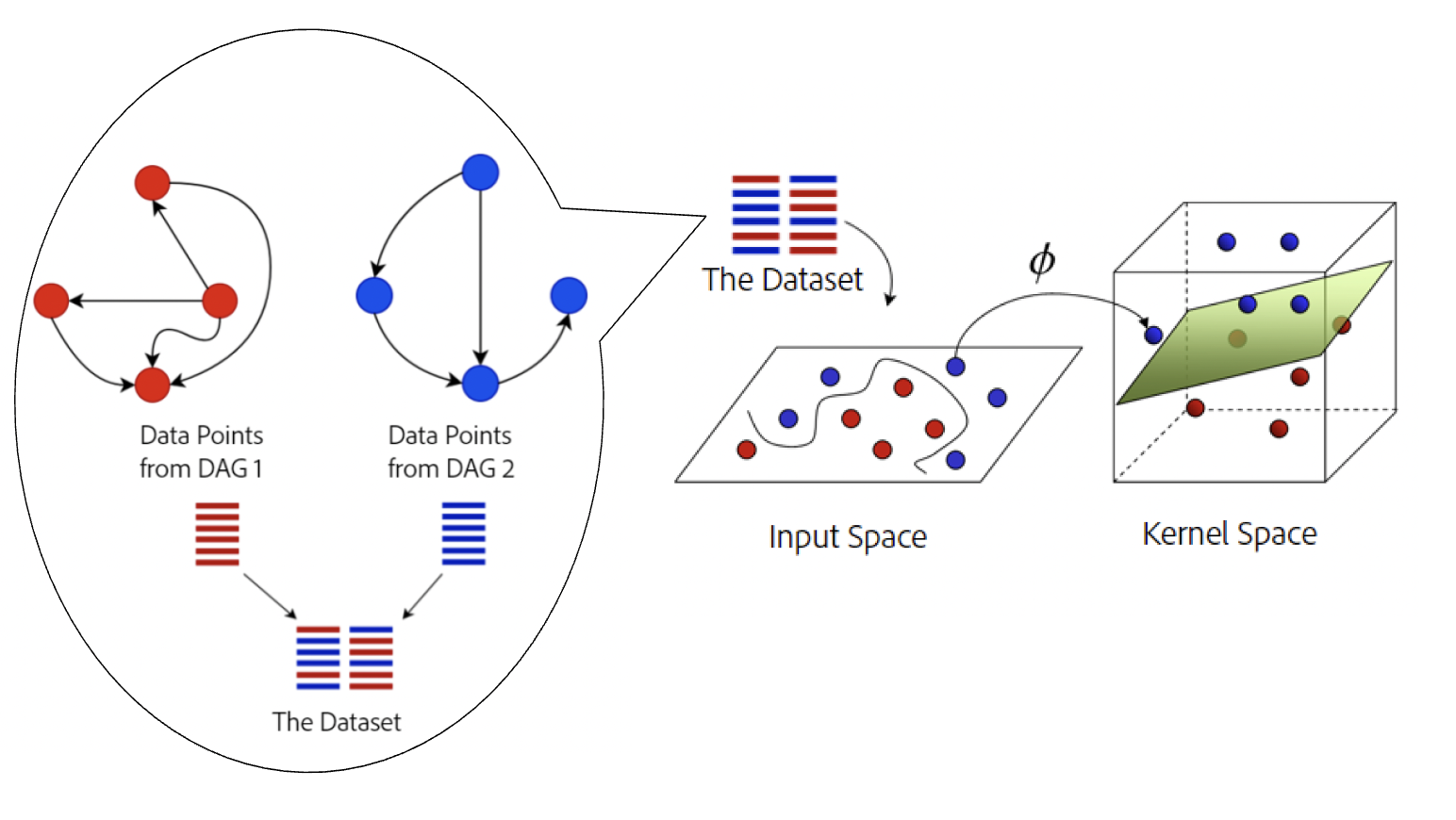

Machine Learning Intern | USPTO Patent Filed*

Adobe Research

I worked in the field of Causal Artificial Intelligence applied to customer clustering. We built a novel iterative clustering algorithm that leverages Bayesian networks (represented as directed acyclic graphs, or DAGs).

*A patent application based on our work was successfully filed with the US Patent and Trademark Office (USPTO).

Clustering users by learning the underlying causal relationships

Improving Spatial Awareness of an Intelligent Prosthetic Arm using VLMs

Stanford University, California

As an MS researcher at ARMLab, Stanford University, I worked on a project that processes video feeds from multiple low-cost cameras and uses VLMs to detect objects of interest, with the goal of ensuring the safety of a prosthetic arm. I developed a ROS pipeline that tracks objects in 3D, visualized in RViz, as they move across the fields of view of different cameras.
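One way to keep a track alive while an object moves between camera views is to express every detection in a shared world frame and associate detections there. The sketch below makes several assumptions. Each VLM detection has already been lifted to a 3D point in its camera's frame (for example, using depth), and each camera's extrinsic transform is known from calibration. `camera_to_world`, `associate` and the 0.3 m gate are hypothetical names and values. In a ROS pipeline, the frame transforms would normally come from tf2 rather than from hand-written matrices.

```python
import math


def camera_to_world(T_world_cam, p_cam):
    """Map a 3D point from one camera's frame into the shared world frame.

    T_world_cam: 4x4 homogeneous transform as nested lists (assumed to come
    from extrinsic calibration of that camera).
    """
    x, y, z = p_cam
    return tuple(
        T_world_cam[r][0] * x + T_world_cam[r][1] * y + T_world_cam[r][2] * z + T_world_cam[r][3]
        for r in range(3)
    )


def associate(tracks, label, p_world, gate=0.3):
    """Nearest-neighbour association in the world frame.

    tracks: dict track_id -> (label, position). The detection joins the closest
    existing track with the same label within `gate` metres; otherwise it starts
    a new track. Returns the track id that was updated or created.
    """
    best_id, best_d = None, gate
    for tid, (tlabel, tpos) in tracks.items():
        d = math.dist(tpos, p_world)
        if tlabel == label and d <= best_d:
            best_id, best_d = tid, d
    if best_id is None:
        best_id = max(tracks, default=-1) + 1
    tracks[best_id] = (label, p_world)
    return best_id
```

Because association happens in the world frame, the same object seen first by one camera and then by another (with a different extrinsic transform) lands on the same track id.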

Human in the Loop, Safe Reinforcement Learning

Indian Institute of Technology, Madras

As a part of my dual degree thesis, I developed a human-in-the-loop safe reinforcement learning framework that ensures safety in both training and deployment.

The approach has two steps. First, expert human input is used to establish a Safe State Space (SSS) and a corresponding Conservative Safe Policy (CSP). Second, we used a modified version of the Deep Deterministic Policy Gradient (DDPG) algorithm, which we called SafeDDPG, augmented with a safety layer (built using the SSS and CSP) that the agent learns during training.

SafeDDPG results on two Safe RL Benchmark Environments. In the Pendulum environment, safety is defined as staying within 20 degrees. In the SafetyPointCircle environment, the bot earns high rewards for moving along the circle but must stay within the yellow walls.
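The shielding idea behind such a safety layer can be sketched for the Pendulum case, where safety means staying within 20 degrees. This is a deliberately simplified stand-in, not SafeDDPG itself. Here the safety layer is a fixed one-step lookahead shield rather than one learned during training. The pendulum model (θ = 0 upright, Euler integration), the angle-only SSS check, the PD-style CSP, and all constants and gains are hypothetical assumptions. The same applies to the names `step`, `in_safe_state_space`, `conservative_safe_policy` and `safety_layer`.

```python
import math

MAX_ANGLE = math.radians(20.0)  # safety: stay within 20 degrees of upright
DT, G, L, M, MAX_TORQUE = 0.05, 10.0, 1.0, 1.0, 2.0  # assumed model constants


def step(state, u):
    """Assumed one-step pendulum model (theta = 0 is upright), Euler-integrated."""
    theta, theta_dot = state
    theta_dot = theta_dot + (3 * G / (2 * L) * math.sin(theta) + 3.0 / (M * L**2) * u) * DT
    return (theta + theta_dot * DT, theta_dot)


def in_safe_state_space(state):
    """Hypothetical SSS membership test: only the angle constraint is checked."""
    return abs(state[0]) <= MAX_ANGLE


def conservative_safe_policy(state):
    """Hypothetical CSP: a saturated PD controller that pushes back towards upright."""
    theta, theta_dot = state
    u = -10.0 * theta - 2.0 * theta_dot
    return max(-MAX_TORQUE, min(MAX_TORQUE, u))


def safety_layer(state, proposed_u):
    """Keep the actor's action if the predicted next state stays in the SSS,
    otherwise fall back to the CSP. Returns (action, overridden)."""
    u = max(-MAX_TORQUE, min(MAX_TORQUE, proposed_u))
    if in_safe_state_space(step(state, u)):
        return u, False
    return conservative_safe_policy(state), True
```

In a real design, the SSS would be chosen so that the CSP can always keep the system inside it. This toy version does not check that property, so it only illustrates where the override happens.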

Reactive Obstacle Avoidance using Depth Images and CBFs

Royal Institute of Technology, Sweden

In this work, we constructed a low-level obstacle avoidance controller that does not rely on global localisation or pre-existing maps, while providing provable safety guarantees in an unknown and uncertain environment.

I built a fully functioning ROS pipeline that generates safe sets from depth images, along with a control barrier function (CBF) based controller that uses these safe sets to generate velocity commands.

The TurtleBot navigates using the CBF-based control outputs.
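The CBF idea can be sketched under strong simplifying assumptions. The robot is treated as a single integrator (its planar position p is driven directly by the velocity command u), and a single circular obstacle stands in for a safe set extracted from the depth image. With h(p) = ||p - c||² - r² and the condition ∇h · u ≥ -α h, the min-norm CBF quadratic program has a closed-form solution for one constraint. The function name `cbf_filter`, the disk obstacle model and α = 1 are illustrative assumptions, not the project's implementation.

```python
def cbf_filter(p, u_nom, center, radius, alpha=1.0):
    """Minimally modify u_nom so that h(p) = ||p - c||^2 - r^2 satisfies
    grad_h . u >= -alpha * h for a single-integrator robot (p_dot = u).

    This is the closed-form solution of
        min ||u - u_nom||^2  subject to  grad_h . u >= -alpha * h.
    """
    dx, dy = p[0] - center[0], p[1] - center[1]
    h = dx * dx + dy * dy - radius * radius
    gx, gy = 2.0 * dx, 2.0 * dy  # gradient of h with respect to p
    g_sq = gx * gx + gy * gy
    if g_sq == 0.0:
        raise ValueError("robot position coincides with the obstacle centre")
    violation = -(gx * u_nom[0] + gy * u_nom[1] + alpha * h)
    if violation <= 0.0:
        return tuple(u_nom)  # nominal command already satisfies the CBF condition
    lam = violation / g_sq  # smallest correction along the gradient direction
    return (u_nom[0] + lam * gx, u_nom[1] + lam * gy)
```

For example, if the robot is at the origin heading at 1 m/s towards a 0.5 m disk centred 1 m ahead, the filter slows it to 0.375 m/s. Commands that move away from the obstacle pass through unchanged. A TurtleBot is a differential-drive robot rather than a single integrator. A common workaround is to apply such a filter to a point slightly ahead of the wheel axle and map the result back to linear and angular velocity. With several obstacles, the constraints must be solved jointly with a QP solver.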

Deep RL in Autonomous Cars

Hokkaido University, Japan

I worked on applying deep reinforcement learning in autonomous cars to optimize traffic flow in scenarios like signal intersections and highway merges.

I explored various state-space encodings for traffic representation and implemented extensions to deep Q-learning, such as double Q-learning, prioritized replay, dueling networks, and noisy nets.
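Three of these extensions reduce to short formulas. Dueling networks change how Q-values are assembled, double Q-learning changes how the bootstrap target is computed, and prioritized replay changes how transitions are sampled. Below are the textbook formulas in plain Python, with lists standing in for network outputs. The function names and the γ and α defaults are illustrative assumptions, not code from the project. Noisy nets, which add learned parameter noise to the network layers, are omitted.

```python
def dueling_q(value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def double_dqn_target(reward, done, q_online_next, q_target_next, gamma=0.99):
    """Double Q-learning target: the online network selects the next action,
    and the target network evaluates it."""
    if done:
        return reward
    best_a = max(range(len(q_online_next)), key=lambda a: q_online_next[a])
    return reward + gamma * q_target_next[best_a]


def per_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritized replay: P(i) = p_i^alpha / sum_k p_k^alpha,
    with priority p_i = |delta_i| + eps so no transition has zero probability."""
    priorities = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]
```

Decoupling action selection from evaluation is what curbs the overestimation bias of the plain max target. In the example used in the tests, the plain DQN target would use the target network's largest value (5.0). The double DQN target instead evaluates the online network's preferred action (3.0).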

Through a thorough empirical analysis, I showed that RL-based autonomous cars can reduce congestion time by 30% compared to rule-based agents.

Traffic flow optimisation in the presence of an obstacle on the road (Car 1 is an RL agent, while the other cars are rule-based and simulated)